Writing as a Signal

Value is linked to scarcity. Signals of value are, indirectly, signals of scarcity. If AI makes the provision of knowledge work abundant, what happens to its value? If we hold the preceding statements to be true, we must conclude that the value of knowledge work diminishes. Where, then, does value reside? How do we signal it?

Before forming AragoAI, I led the AI team at a two-sided marketplace for human labour with over 70 million users. Alongside this role, I acted as the group's academic research coordinator. We collaborated with several universities to conduct novel research on labour market dynamics. Most relevant to this discussion are independent collaborations with researchers from Princeton, Dartmouth and Yale.

Making Talk Cheap: Generative AI and Labor Market Signalling (Silbert & Galdin, 2025) explored the value of writing as a signal before and after LLMs. They argue that, before LLMs, workers' text-based bids were a Spence-like signal of value on which buyers could base hiring decisions. Writing a customised text bid for each job was costly, and so indirectly signalled a seller's ability to complete the job. LLMs eroded the value of this signal by diminishing the effort required. In the framing posed at the start of this article, LLMs reduced the scarcity of customised bids and thereby their value.
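The logic of a Spence-like signal can be illustrated with a toy two-type example. This is a minimal sketch, not the model estimated in the paper: the function name, the two-type setup and all numbers are hypothetical. It assumes a customised bid is cheaper to write for a high-ability worker than for a low-ability one, and that being hired on the strength of a bid is worth a fixed premium. The bid is informative only when high-ability workers find it worth writing and low-ability workers do not.

```python
def bid_is_informative(premium: float, cost_high: float, cost_low: float) -> bool:
    """Return True when only high-ability workers find a customised bid worth writing.

    premium   -- hypothetical payoff from being perceived as high ability
    cost_high -- hypothetical cost of writing a bid for a high-ability worker
    cost_low  -- hypothetical cost of writing a bid for a low-ability worker
    """
    high_writes = premium >= cost_high
    low_writes = premium >= cost_low
    # The signal separates the two types only if exactly the high type sends it.
    return high_writes and not low_writes


# Illustrative numbers only: before LLMs, writing is much costlier for the low type.
pre_llm = bid_is_informative(premium=10.0, cost_high=4.0, cost_low=15.0)

# After LLMs, the writing cost is close to zero for everyone, so both types bid.
post_llm = bid_is_informative(premium=10.0, cost_high=0.1, cost_low=0.1)

print(pre_llm, post_llm)
```

Under these assumed numbers the bid separates the two types before LLMs and stops doing so once the writing cost collapses for both, which is the sense in which the signal loses its value.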

In their paper, Jesse and Anaïs estimated an equilibrium model of labour market signalling on the platform and used it to simulate counterfactuals. One of these assumed a complete erosion of text-based signalling value, with LLMs lowering the cost of writing a bid to zero. They found a reduction in seller welfare and in overall welfare but virtually no effect on buyer welfare, ceteris paribus. The loss was driven by a diversion of hiring from high-ability to low-ability workers. Buyers were compensated for this diversion by the lower cost of labour. They conclude:

These results imply that many markets that rely on costly written communication may face significant welfare and meritocratic threats from generative AI's ability to cheaply produce expertly-written text. Either firms within or designers of these markets may seek to mitigate these threats by investing in more effective screening technology that cannot be gamed by generative AI, or by redesigning labor contracts to incentivize more exploratory short-term hiring that allows employers to learn about workers' abilities on the job, rather than relying on pre-hire signals.

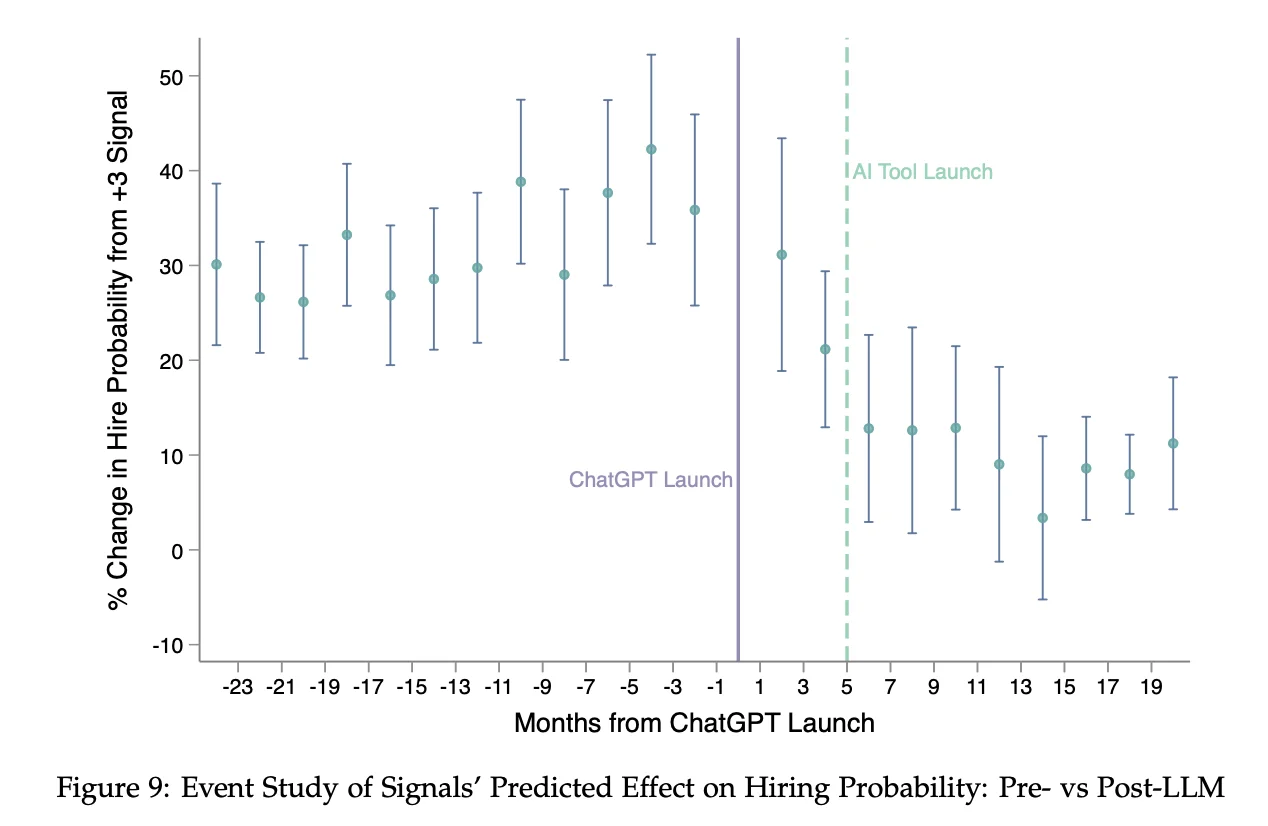

Signalling in the Age of AI: Evidence from Cover Letters (Cui, Dias & Ye, 2025) provided complementary research on the platform. They conclude:

While AI tools allow freelancers to produce more polished and tailored applications with less effort, our findings suggest that they fundamentally reshape how employers interpret cover letters. The widespread adoption of AI-assisted writing diminishes the informational value of cover letters, weakening their role as a hiring signal. In response, employers place greater weight on alternative signals, such as past work experience, that are less susceptible to AI.

Our claim that value, and signals of value, are tied to scarcity is clearly reductive, but it provides a useful lens for considering the future of knowledge work. In the case above, written bids were not inherently valuable; they were a proxy. When LLMs invalidate that proxy, the market must turn to price discrimination or other signals. How much of knowledge work is fundamentally similar? We must separate value from medium, and quality from quantity. In a future where report generation is commodified, the value of each report will fall.

This is OK. The value of knowledge work should be tied to what it enables, not to its existence. This is why metrics such as the number of lines of code a developer writes are particularly misleading in a post-LLM world. A report is valuable if it uncovers insights that may be acted upon, now or in the future (ignoring deeper philosophical discussions of knowledge for knowledge's sake). The role of the knowledge worker may shift to determining what should be done: allocating compute and creating the scaffolds within which AI systems can operate.

It is not clear whether humans will always be required for this role. One can imagine a future where AI systems are also capable of making these decisions. The success of Reinforcement Learning from Verifiable Rewards for math and coding provides a glimpse into this future. For knowledge work, the challenge, for now, is that defining verifiable rewards is non-trivial, and the shape of these rewards is unclear. This problem does not seem insurmountable.
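To make the contrast concrete, here is a minimal sketch of what a verifiable reward can look like for a math problem. It is an illustration under stated assumptions, not a description of any particular training system: the function name `math_reward` and the exact-match rule are hypothetical. The point is that correctness can be checked mechanically against a reference answer, whereas no comparably simple check exists for whether a report is insightful.

```python
from fractions import Fraction


def math_reward(candidate: str, reference: str) -> float:
    """Return 1.0 if the candidate answer equals the reference value, else 0.0.

    Both arguments are strings such as "1/2" or "0.5". Anything that cannot
    be parsed as a rational number earns no reward.
    """
    try:
        matches = Fraction(candidate.strip()) == Fraction(reference.strip())
    except (ValueError, ZeroDivisionError):
        # Unparseable output (or a zero denominator) cannot be verified.
        return 0.0
    return 1.0 if matches else 0.0


# Hypothetical model outputs scored against the reference answer "1/2".
correct = math_reward("0.5", "1/2")
wrong = math_reward("0.6", "1/2")
unverifiable = math_reward("a well-written report", "1/2")
```

A free-text report falls into the last case: this kind of checker has nothing to compare it against, which is one way of seeing why the shape of rewards for knowledge work remains an open question.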